LLM-based Political Stance Classification

ML&AI Academic Research • Vancouver, BC

Key Accomplishments

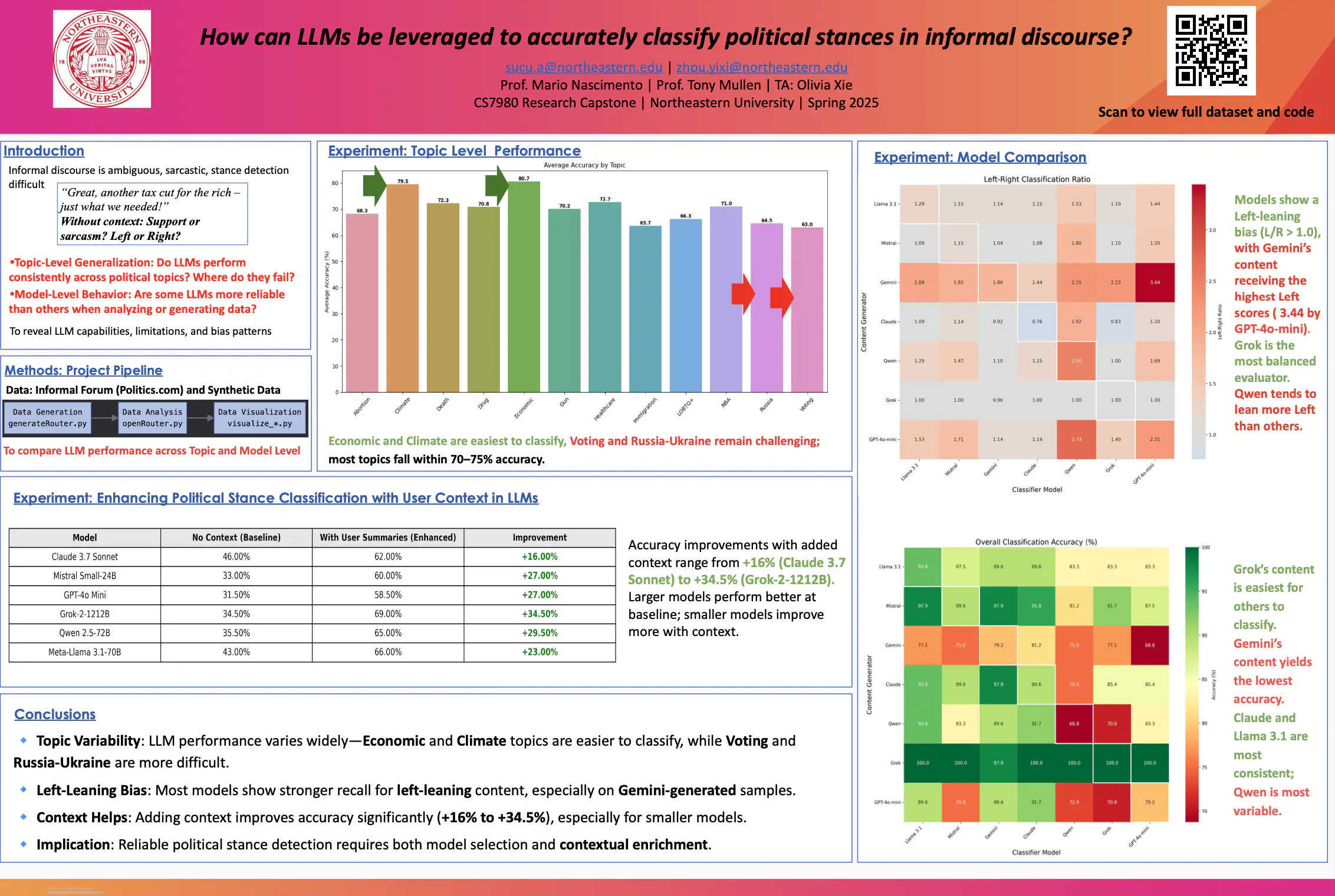

Engineered a political stance classifier using RAG and hybrid NLP-graph techniques, achieving 90% accuracy by fine-tuning large language models and designing prompt templates to improve generalization across domains.

Constructed scalable embedding pipelines with LangChain, pgvector, and spaCy-based NLP preprocessing, aggregating more than 70,000 social media text records (Twitter, Reddit) to enhance training diversity and robustness.

Benchmarked open-source and proprietary LLMs (Claude, Gemini, Mistral, LLaMA) across 10+ political topics, applying cross-model bias mitigation and visualizing results in Tableau to assess fairness.
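As an illustration of the benchmarking step, the sketch below shows one way per-model, per-topic accuracy could be tabulated before charting. It is not the project's actual code: the record layout, the stance labels, the topic names, and the helper names `accuracy_by_model_and_topic` and `accuracy_gap` are all assumptions made for the example, and the toy records are invented.

```python
from collections import defaultdict


def accuracy_by_model_and_topic(records):
    """Return {model: {topic: accuracy}} from (model, topic, gold, predicted) tuples.

    The tuple layout is an assumption for this sketch, not the project's schema.
    """
    correct = defaultdict(lambda: defaultdict(int))
    total = defaultdict(lambda: defaultdict(int))
    for model, topic, gold, predicted in records:
        total[model][topic] += 1
        if gold == predicted:
            correct[model][topic] += 1
    return {
        model: {topic: correct[model][topic] / n for topic, n in topics.items()}
        for model, topics in total.items()
    }


def accuracy_gap(scores, topic):
    """Spread between the best and worst model on one topic.

    A large gap is a crude signal that models disagree on that topic
    and that it may deserve a closer fairness review.
    """
    values = [per_topic[topic] for per_topic in scores.values() if topic in per_topic]
    return max(values) - min(values)


# Hypothetical toy records: (model, topic, gold label, predicted label).
records = [
    ("model_a", "immigration", "favor", "favor"),
    ("model_a", "immigration", "against", "favor"),
    ("model_a", "healthcare", "against", "against"),
    ("model_b", "immigration", "favor", "favor"),
    ("model_b", "immigration", "against", "against"),
    ("model_b", "healthcare", "against", "neutral"),
]

scores = accuracy_by_model_and_topic(records)
for model, per_topic in scores.items():
    for topic, acc in per_topic.items():
        print(f"{model:8s} {topic:12s} {acc:.2f}")
```

A nested table like `scores` can be flattened to CSV rows (model, topic, accuracy) for a tool such as Tableau, and `accuracy_gap(scores, topic)` gives a quick per-topic comparison across models.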

Technologies Used

Hugging Face, PyTorch

RAG, LangChain, pgvector

spaCy, NLP preprocessing

Claude, Gemini, Mistral, LLaMA

Tableau